Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence | Information

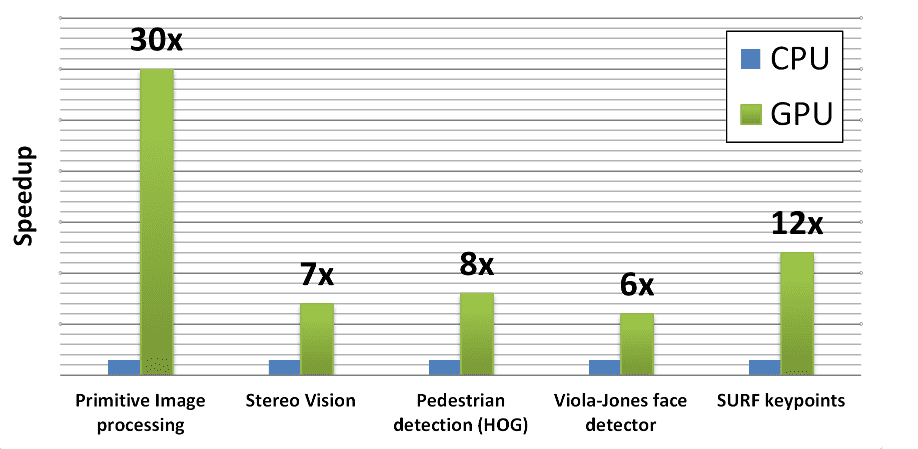

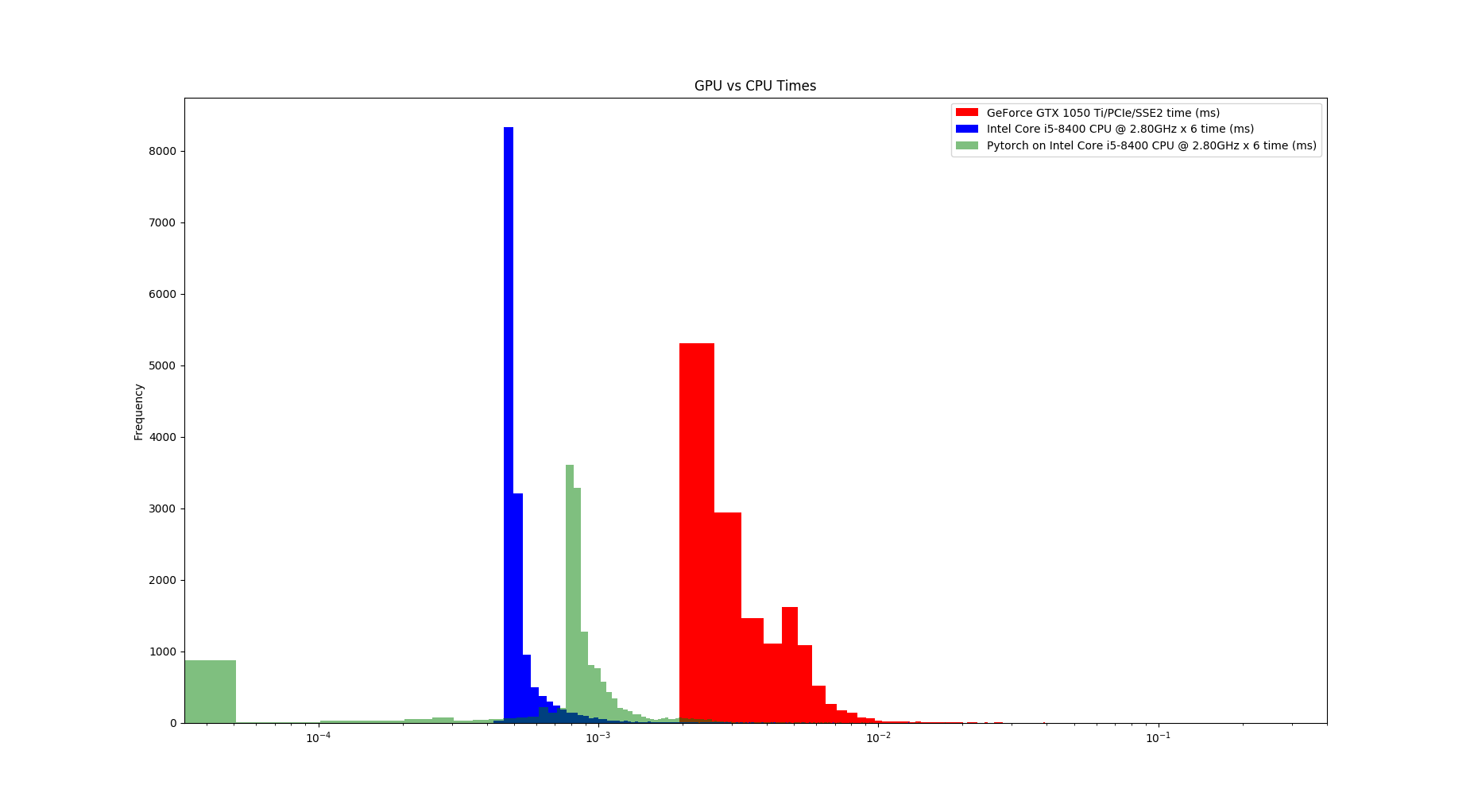

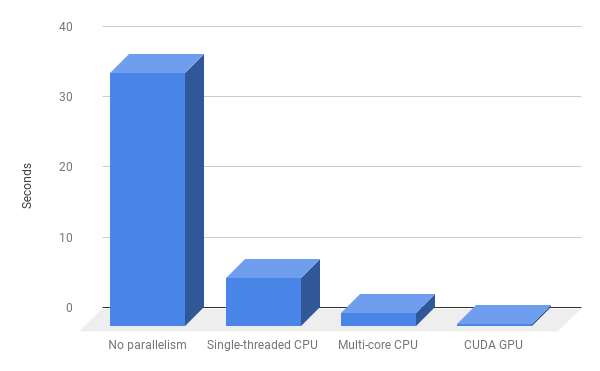

How GPU Computing literally saved me at work? | by Abhishek Mungoli | Walmart Global Tech Blog | Medium

Hands-On GPU Programming with Python and CUDA: Explore high-performance parallel computing with CUDA | by Dr. Brian Tuomanen | ISBN 9781788993913 | Amazon

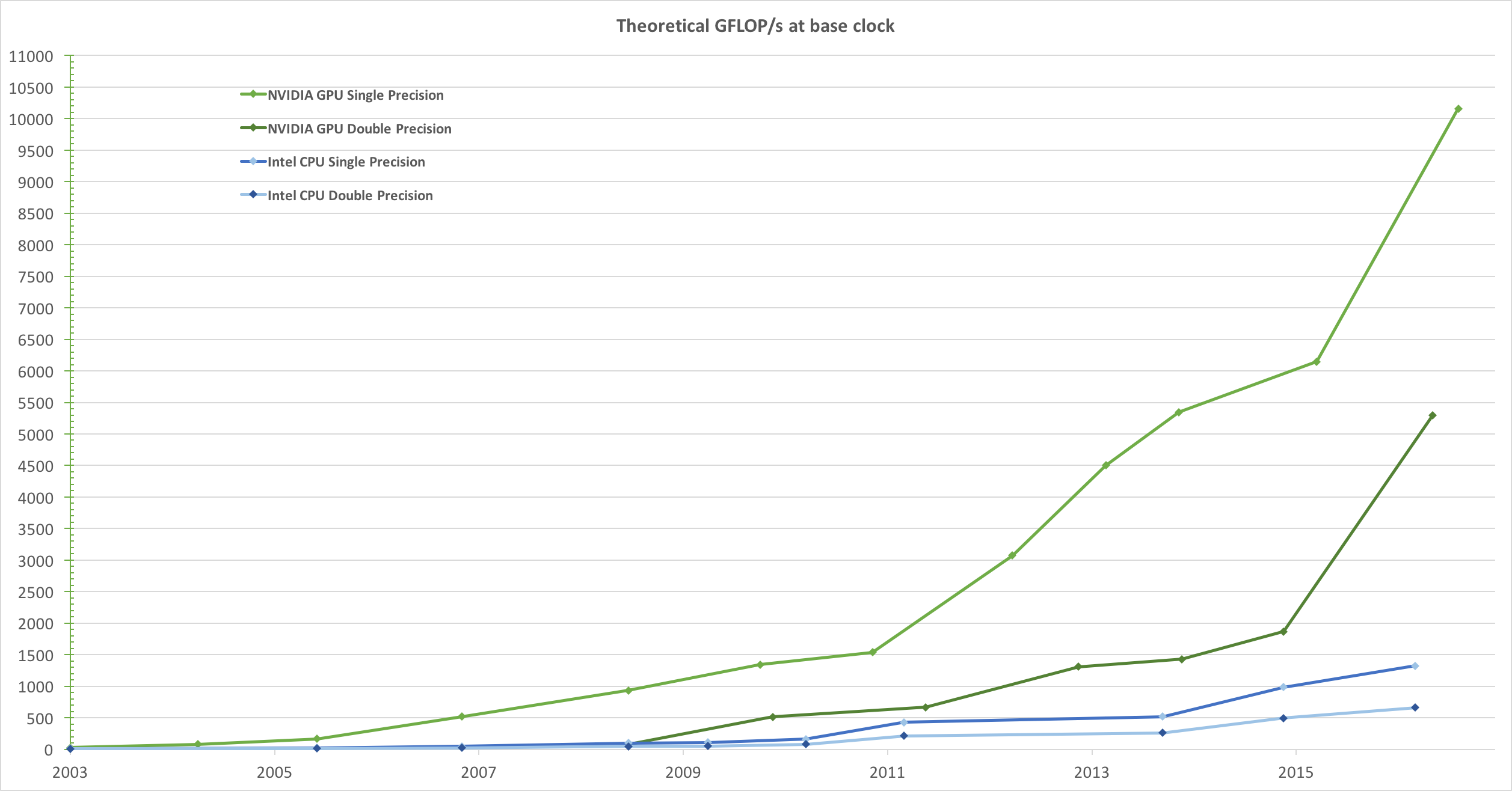

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

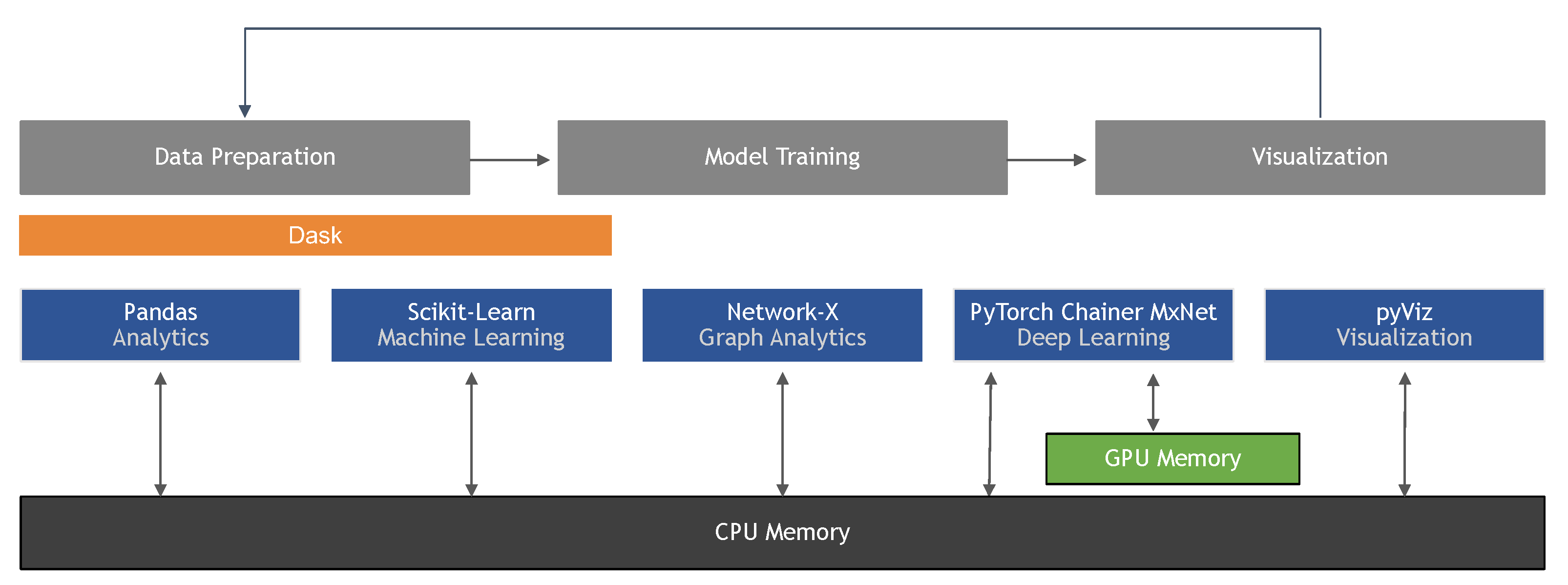

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

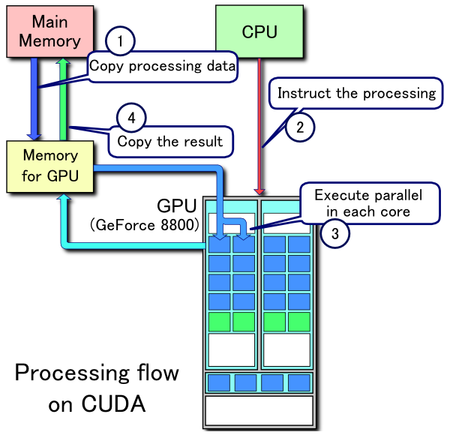

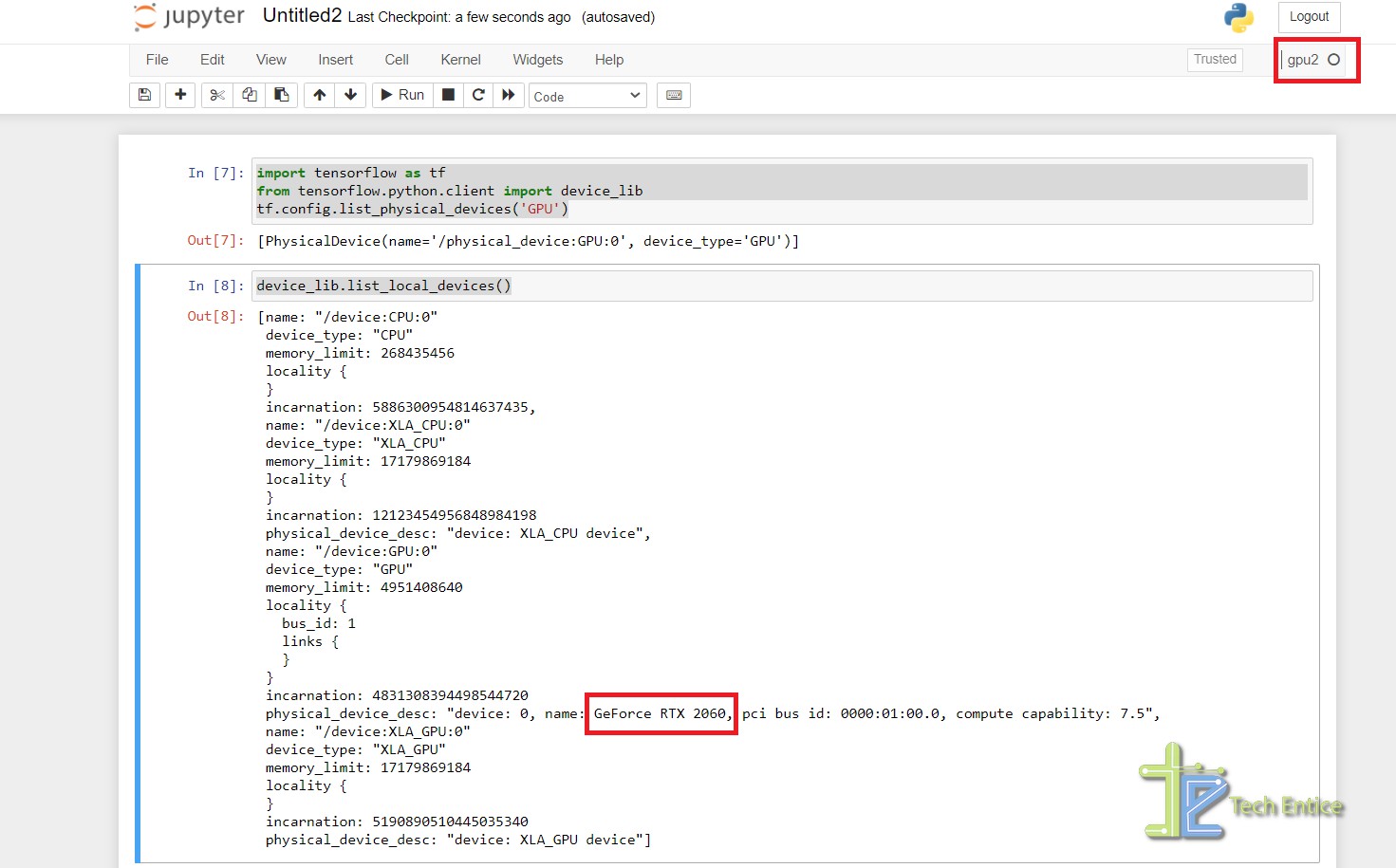

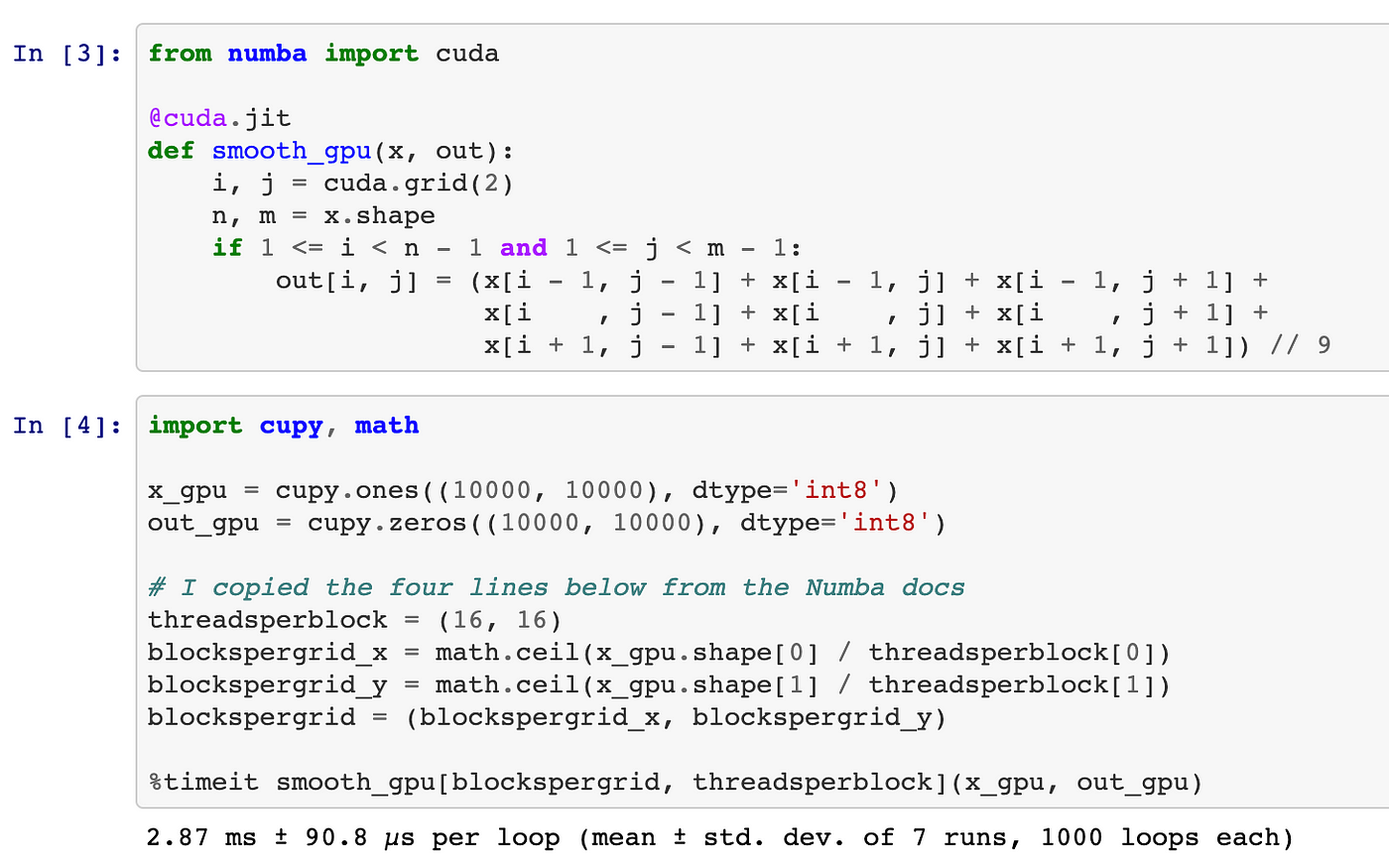

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium